Samsung appears to be upgrading Bixby without starting over with a brand-new assistant. Instead, Bixby would become a front end that routes requests to different AI systems behind the scenes, depending on what the user is doing and where they are in the world. In effect, Bixby becomes a gateway to multiple AI engines.

Recent leaks and reports about One UI 8.5 suggest that Samsung is testing two external AI components:

Perplexity AI for web-style “answer engine” responses (with citations/sources).

DeepSeek (often discussed in the context of strong reasoning and China-focused deployments) to power richer generative and conversational features—especially the rumored Bixby Live experience.

1) Why is Samsung rebuilding Bixby now?

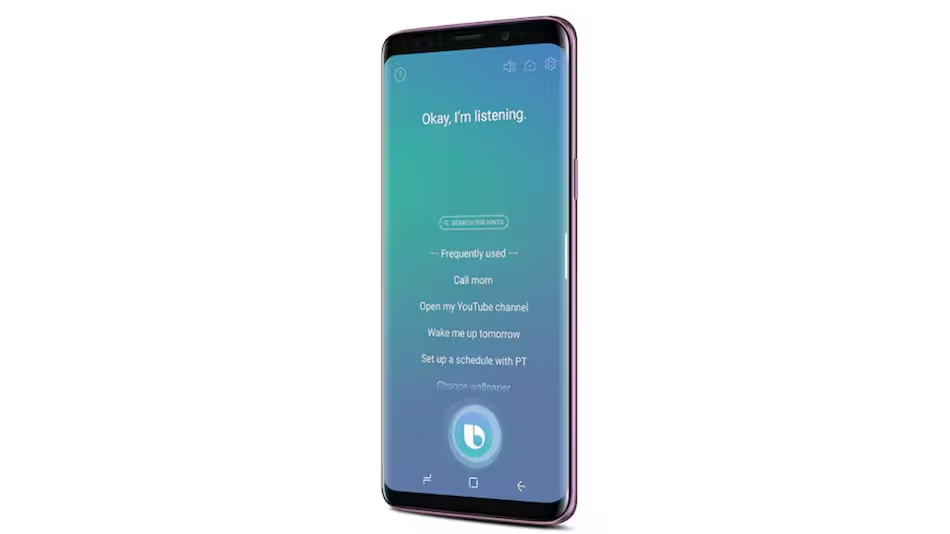

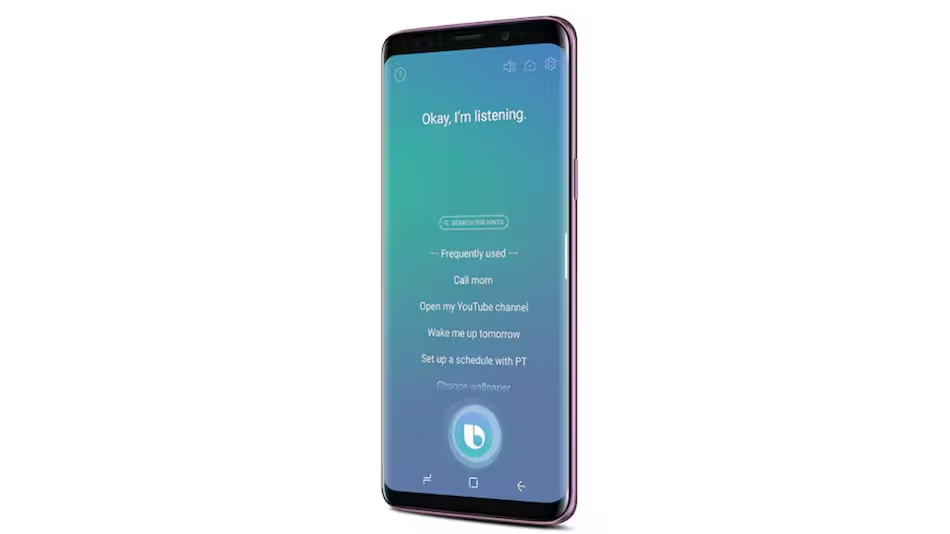

Bixby has always had one real strength: deep control of Samsung phones. It can change settings, modes, and routines, flip system toggles, operate Samsung apps, and perform other device-specific actions. That is exactly what many people want from a voice assistant: controlling their phone with their voice.

Over the past few years, the assistant market has changed a lot. People now expect assistants to handle:

long, messy questions

follow-up context

summarization

writing and rewriting

understanding what is on screen

multi-step reasoning (plan, compare, then act)

Those are the exact strengths of modern LLMs and answer engines.

This also matters for Samsung’s broader strategy. The company has heavily promoted Galaxy AI and is bringing it to many more devices, while at the same time still relying on Google’s Gemini for many features.

That creates a tension:

Google wants Gemini to be the default AI brain across Android.

Samsung wants to control its own user experience and keep its options open.

Users just want the best answer, fast, regardless of which model provides it.

Samsung’s apparent plan for One UI 8.5 is to keep Bixby as the interface for talking to and controlling the device, while sourcing the smartest parts from partners and plugging them in behind Bixby.

2) What is actually being reported in the One UI 8.5 leaks?

A) “Bixby powered by Perplexity” behavior

According to reports, when you ask Bixby in One UI 8.5 a complex, knowledge-heavy question, it hands the query to Perplexity, indicates that Perplexity was used, and may show links to the sources behind the answer.

That is a big deal because Perplexity’s product identity is strongly tied to:

answering with web-backed results

showing sources

acting more like a research/answer engine than a simple chatbot

If Bixby can really do this, it fixes a long-standing complaint: Bixby is good at toggling settings but poor at answering questions the way Gemini or ChatGPT can. Closing that gap is something Bixby users have wanted for years.

B) “Bixby Live” and real-time conversation

The most exciting part of the leak is a Bixby Live mode that closely resembles Google’s Gemini Live: a real-time, conversational way of talking to your device. It can respond to what is on the screen and might one day respond to what the camera sees. The appeal is that it stays available for an ongoing conversation instead of handling one command at a time.

Leaked screenshots and firmware write-ups show Bixby appearing as a floating window rather than a full-screen overlay, which makes it less intrusive than assistants that take over the display. The window offers options such as:

continuing a multi-turn conversation

copying responses

rating results (like/dislike)

tapping Sources to open supporting info via Perplexity

C) “Circle to Ask” (Samsung’s spin on Circle to Search)

Another reported feature, Circle to Ask, lets you highlight something on the screen and then ask questions about it. It works much like Google’s Circle to Search.

Even if Samsung changes the name or the UI, the direction is clear: it wants interaction built on conversation and context, not a single voice command producing a single result.

D) Third-party integrations (travel, rides, weather, maps)

Some coverage of the leak mentions integrations with weather providers, map services, travel services, and ride-hailing services. The exact partners vary between reports, but the idea is the same: the assistant fetches information and then helps you act on it.

This mirrors what is happening with assistants everywhere: they are moving from answering to doing.

3) Where DeepSeek fits in, and why Samsung would use it

Reports describe a DeepSeek-powered Bixby in One UI 8.5 as a generative, conversational brain that works alongside Perplexity rather than replacing it.

The obvious question: Samsung already works with Google and may integrate Perplexity, so why add DeepSeek at all?

A) Multi-model strategy (best tool for each job)

Think of Samsung building an assistant like a smartphone camera system:

ultra-wide lens for one scenario

telephoto for another

main sensor for most situations

On the intelligence side, Samsung could similarly combine:

an “answer engine” for web Q&A (Perplexity)

a conversational model for real-time dialogue (DeepSeek in some regions, potentially other models elsewhere)

an on-device model for privacy-sensitive tasks or offline use

Gemini for integrated Android and Galaxy AI workflows, especially in markets where that partnership is strongest

B) China realities and regional AI ecosystems

Samsung sells devices worldwide, including in places where Google services are restricted or not popular. In those markets, “Gemini everywhere” is not an option.

Much of the discussion about DeepSeek and Samsung has focused on how it could work with Samsung’s products in China.

If Samsung wants a single Bixby experience worldwide, it may need different backends in different regions:

Perplexity where its web answering is available and permitted

DeepSeek where it is easier to deploy, legally permitted, or a good fit for the target experience

other partners as needed
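To make the region-by-region idea concrete, here is a minimal Python sketch of backend selection. Everything in it is hypothetical: the region codes, task names, the `BACKENDS_BY_REGION` table, the placeholder `"other_partner"`, and the fallback order are assumptions for illustration, not anything reported about Samsung’s actual implementation.

```python
from typing import Optional

# Hypothetical preference table: for each region and task, an ordered list
# of backends. Names and regions are illustrative assumptions only.
BACKENDS_BY_REGION: dict[str, dict[str, list[str]]] = {
    "US": {"web_qa": ["perplexity"], "live_chat": ["other_partner"]},
    "CN": {"web_qa": ["deepseek"], "live_chat": ["deepseek"]},
}

# Fallback preferences for regions without an explicit entry.
DEFAULT_PREFERENCES: dict[str, list[str]] = {
    "web_qa": ["perplexity", "other_partner"],
    "live_chat": ["other_partner"],
}


def select_backend(region: str, task: str, available: set[str]) -> Optional[str]:
    """Return the first preferred backend that is currently available, or None."""
    prefs = BACKENDS_BY_REGION.get(region, DEFAULT_PREFERENCES).get(task, [])
    for backend in prefs:
        if backend in available:
            return backend
    return None
```

The design choice here is that the user-facing assistant never changes; only the table behind it differs per market, and a `None` result lets the caller fall back to on-device handling or an error message.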

C) The Bixby Live feel needs real-time generation

A live, flowing assistant needs:

low latency

stable multi-turn memory

strong instruction following

good summarization and rephrasing

robust reasoning for “do X, then Y, then Z”

The leaks suggest Samsung is aiming for an experience similar to Gemini Live.

So using DeepSeek for that layer, at least in some builds, makes sense: Samsung wants Bixby to feel current, and a strong conversational model helps it feel like modern technology.

4) What Perplexity brings that traditional assistants often do not

Perplexity’s brand is built on answers with sources, and showing where information comes from changes how much users trust a system:

you can verify claims against the original source

you can open the source to read more

the assistant behaves like a research tool, not just a talker

The reporting suggests Bixby hands tough questions to Perplexity. Bixby remains the assistant you talk to, while Perplexity does the heavy lifting in the background, finding answers on the web.

This is similar to Apple’s approach with Siri and ChatGPT. Apple does not try to make Siri a great chatbot on its own; instead, Siri hands certain requests to ChatGPT when it needs help, so it stays useful even when it cannot answer something by itself.

Samsung seems to be doing something similar, but with multiple partners instead of one.

5) How this could work in practice

Here is a simple way that Samsung could do this:

Scenario 1: Device control (classic Bixby strength)

User: “Turn on hotspot, set it to 5GHz, and enable battery saver.”

Bixby uses Samsung’s internal APIs and system hooks.

A small on-device model might help parse the user’s intent.

No need for Perplexity or DeepSeek unless the command is complex.
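A compound command like the one above has to be split into individual device actions before anything runs. The sketch below uses simple keyword rules as a stand-in for the small on-device model; the rules and the action names (`hotspot_on`, `hotspot_band`, `battery_saver_on`) are hypothetical and do not correspond to any real Samsung API.

```python
import re
from typing import Optional

# Hypothetical keyword rules standing in for an on-device intent model.
# Each rule maps a regex to an illustrative action name.
RULES: list[tuple[str, str]] = [
    (r"turn on (the )?hotspot", "hotspot_on"),
    (r"set it to (\d+(\.\d+)?)\s*ghz", "hotspot_band"),
    (r"enable battery saver", "battery_saver_on"),
]


def parse_command(text: str) -> list[tuple[str, Optional[str]]]:
    """Split a compound command into ordered (action, argument) pairs."""
    actions: list[tuple[str, Optional[str]]] = []
    # Split into clauses on commas (optionally followed by "and") or on " and ".
    clauses = re.split(r",\s*(?:and\s+)?|\s+and\s+", text.lower())
    for clause in clauses:
        for pattern, action in RULES:
            match = re.search(pattern, clause)
            if match:
                # Only the band rule carries an argument (the GHz value).
                arg = match.group(1) if action == "hotspot_band" else None
                actions.append((action, arg))
                break
    return actions


print(parse_command("Turn on hotspot, set it to 5GHz, and enable battery saver."))
```

Every resulting action maps to a local system call, so no cloud model is involved. In this sketch a clause that matches no rule is silently dropped; a real assistant would more likely ask for clarification or escalate to a larger model.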

Scenario 2: Web question

User: “What’s the difference between an ETF and a mutual fund, and which is better for beginners?”

Bixby detects “knowledge question.”

Routes to Perplexity.

Returns a structured answer with citations/sources button.
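The “structured answer with citations” step could look something like the sketch below. The `Source` and `Answer` types and the numbered rendering are assumptions for illustration; the leaks only say that a Sources button opens supporting info via Perplexity, not what the underlying data looks like.

```python
from dataclasses import dataclass, field


@dataclass
class Source:
    title: str
    url: str


@dataclass
class Answer:
    text: str
    provider: str  # which backend produced the answer, e.g. "perplexity"
    sources: list[Source] = field(default_factory=list)


def render(answer: Answer) -> str:
    """Render the answer text, a provider label, and a numbered source list."""
    lines = [answer.text, f"(Answered with {answer.provider})"]
    if answer.sources:
        lines.append("Sources:")
        for i, src in enumerate(answer.sources, start=1):
            lines.append(f"[{i}] {src.title} - {src.url}")
    return "\n".join(lines)
```

Keeping the provider label and the sources attached to the answer object means the UI can always show where an answer came from, rather than relying on the answer text to mention it.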

Scenario 3: Live conversation + context

User: “Help me write a polite reply to this message, but keep it short.”

Bixby Live opens as a floating window.

The “live” model (reportedly DeepSeek in some builds) drafts options quickly.

When the user follows up with “add a sentence explaining the delay,” it keeps the conversation context and revises the draft accordingly.
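Keeping context across turns mostly means sending earlier turns along with each new request. Here is a minimal sketch, assuming a hypothetical `generate` callable that stands in for whichever live model a region uses; the role/content message format is a common chat-model convention, not something the leaks describe.

```python
from typing import Callable

# A message is a dict with "role" ("user" or "assistant") and "content".
Message = dict[str, str]


class LiveSession:
    """Keeps conversation history so follow-ups can refer to earlier turns."""

    def __init__(self, generate: Callable[[list[Message]], str], max_messages: int = 20):
        self.generate = generate  # hypothetical model call: history -> reply text
        self.max_messages = max_messages
        self.history: list[Message] = []

    def ask(self, text: str) -> str:
        self.history.append({"role": "user", "content": text})
        # Send only the most recent messages to bound latency and cost.
        reply = self.generate(self.history[-self.max_messages:])
        self.history.append({"role": "assistant", "content": reply})
        return reply
```

With this shape, a follow-up like “add a sentence explaining the delay” reaches the model together with the original request and the first draft, which is what lets it revise rather than start over. The `max_messages` cap is a simple trade-off between memory and latency.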

Scenario 4: On-screen understanding (“Circle to Ask”)

User: “Is this a good price? Find similar products to compare.”

Bixby extracts the on-screen content.

Routes comparison/search to Perplexity (or another service).

Returns a summarized result with sources you can check yourself.

The key idea is that Bixby acts as the orchestrator. The user only ever talks to Bixby, and Bixby decides which engine should respond.
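The orchestrator role can be sketched as a small dispatcher. The classification below is a crude keyword heuristic standing in for whatever intent model Samsung might use, and the engine names are placeholders; the point is only that there is one entry point for the user while the routing decision happens inside.

```python
from enum import Enum


class Engine(Enum):
    DEVICE = "on_device"      # Samsung system APIs, no cloud model
    ANSWER = "answer_engine"  # web Q&A with sources (e.g. Perplexity)
    LIVE = "live_model"       # conversational generation (e.g. DeepSeek in some builds)


# Illustrative keyword lists; a real system would use a trained classifier.
DEVICE_VERBS = ("turn on", "turn off", "enable", "disable", "set ")
QUESTION_STARTS = ("what", "why", "how", "which", "who", "is this", "compare")


def route(utterance: str) -> Engine:
    """Pick an engine for one utterance using simple heuristics."""
    text = utterance.lower().strip()
    if text.startswith(DEVICE_VERBS):
        return Engine.DEVICE
    if text.startswith(QUESTION_STARTS) or text.endswith("?"):
        return Engine.ANSWER
    # Open-ended requests (drafting, rewriting, chatting) go to the live model.
    return Engine.LIVE
```

Applied to the four scenarios above, the hotspot command routes to `DEVICE`, the ETF question and the “Is this a good price?” request route to `ANSWER`, and the reply-drafting request routes to `LIVE`. A keyword heuristic like this is brittle, which is exactly why a real assistant would use a model for this step.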

6) How this changes Samsung’s relationship with Google and Gemini

Samsung and Google work closely together on Android, and Samsung has showcased Gemini-powered features as part of Galaxy AI.

So why bring in Perplexity/DeepSeek?

Because assistants are a strategic battleground:

If Google is the AI brain, Samsung risks becoming just the hardware for Google’s AI.

If Samsung owns the assistant layer and can swap backends as it sees fit, Samsung keeps the upper hand.

This does not mean Samsung is giving up on Gemini. Reuters’ reporting on Samsung’s AI device plans still describes Gemini as a core part of the AI features on Galaxy devices.

The more likely outcome is:

Gemini stays central to many Galaxy AI workflows

Bixby gains partner-powered capabilities for Q&A and live, real-time chat

Users see one consistent assistant, while the backend varies by feature, region, or user preference

This “multi-brain assistant” approach is becoming popular because no single model is best at everything. One model may be cheap but slow; another may fit regional policy, privacy, or latency requirements better.

7) The Samsung and Perplexity story did not start recently

The One UI 8.5 rumors fit a longer timeline. In mid-2025, Bloomberg reported that Samsung was in talks to integrate Perplexity into its products, including Bixby and other Samsung experiences.

Other reports describe Perplexity’s ambition to become the default assistant on major phones. Samsung, with its huge installed base, would be an ideal partner for that strategy.

So the One UI 8.5 leaks do not look like a sudden development. They look like the tip of the iceberg: a visible piece of discussions between the companies that have been going on for a long time.

8) Privacy and data handling: why users should care

Any time an assistant can see:

what is on your screen

what you are typing

what you ask repeatedly

what your camera sees (possibly, in the future)

Privacy and data governance then become the central issue.

Key questions users should ask once Samsung confirms the details:

What is processed on-device, and what is processed in the cloud?

When exactly is a query routed to Perplexity or DeepSeek?

Can users opt out of third-party routing?

Are conversations stored, and where?

Does tapping Sources behave like web browsing, and is that activity logged?

For now, we do not have Samsung’s final policy language for One UI 8.5, since everything here is based on leaks and reports.

It is reasonable to expect Samsung to offer toggles similar to those for its other AI features, likely covering data sharing, cloud processing, and personalization.
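Toggles like these would most likely act as gates in front of any third-party routing. The sketch below shows one way such gating could work. The setting names mirror the three categories mentioned above but are hypothetical, the destination labels are invented, and nothing in the leaks confirms this logic.

```python
from dataclasses import dataclass


@dataclass
class AiPrivacySettings:
    # Hypothetical toggles; all default to off in this sketch.
    data_sharing: bool = False      # allow sending queries to third-party partners
    cloud_processing: bool = False  # allow any cloud processing at all
    personalization: bool = False   # allow attaching history to tailor answers


def decide_routing(settings: AiPrivacySettings, wants_partner: bool) -> tuple[str, bool]:
    """Return (destination, include_history) for one query under the user's settings."""
    include_history = settings.personalization
    if not settings.cloud_processing:
        # No cloud allowed: everything stays on the device.
        return "on_device", include_history
    if wants_partner and not settings.data_sharing:
        # Cloud is allowed, but third parties are not: use first-party cloud only.
        return "first_party_cloud", include_history
    return ("partner_cloud" if wants_partner else "first_party_cloud"), include_history
```

Checking the most restrictive toggle first means a user who disables cloud processing never reaches a partner, whatever the other settings say. Defaulting every toggle to off is a conservative choice for the sketch; Samsung’s actual defaults are unknown.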

It is also worth noting that showing sources, as Perplexity does, is good for transparency. But it also means the system retrieves information from the web, which gives it a different privacy profile from an assistant that works offline with the data it already has.

9) The TV angle: Samsung is already shipping generative Bixby elsewhere

One reason the One UI 8.5 rumors seem credible is that Samsung has already made Bixby more conversational on its TVs.

The Verge reported that Samsung’s 2025 TV lineup includes a generative AI Bixby experience that can answer questions about what is on screen and suggest what to watch. Samsung built it with partner AI technology, including Perplexity and Microsoft Copilot.

So Samsung has done this before: pairing Bixby with partner AI is no longer just an idea, it is already shipping.

10) What this could mean for users: the real benefits

If these changes ship broadly, rather than remaining hidden test features, the benefits could be significant:

A) Bixby becomes useful beyond changing settings

Historically:

“Turn on Bluetooth” ✅

“Explain this topic and give sources” ❌

With Perplexity-style answering:

“Explain + cite sources” ✅

B) Less app-switching

People are jumping back and forth between:

assistant

browser

notes

search results

messaging apps

A floating Bixby Live window that can read what is on the screen could cut down on that switching.

C) Better “agent” workflows

If services like travel, rides, and maps truly integrate with the assistant, it gets much closer to what people actually want:

“Plan my commute and book the ride”

“Find flights and compare prices”

“What’s the weather at my destination?”

11) Realistic risks: what could go wrong

A) Fragmented experience

If Bixby behaves one way for device commands, another for web questions, and another for live chat, users may notice inconsistencies in:

tone

accuracy

formatting

memory/context

permissions

Samsung needs a strong orchestration layer that makes it all feel like one assistant, not three stitched together.
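One piece of that orchestration layer could be normalizing every backend’s output into a single response shape before anything reaches the UI, so formatting and attribution stay consistent. This is a hypothetical sketch: `BixbyResponse`, the engine labels, and the raw payload shapes are invented for illustration.

```python
from dataclasses import dataclass, field
from typing import Any


@dataclass
class BixbyResponse:
    """One response shape for the UI, whatever backend produced it."""
    text: str
    engine: str
    sources: list[str] = field(default_factory=list)


def normalize(engine: str, raw: dict[str, Any]) -> BixbyResponse:
    """Map a backend-specific payload (hypothetical shapes) to BixbyResponse."""
    if engine == "answer_engine":
        # Assumed shape: {"answer": str, "citations": [url, ...]}
        return BixbyResponse(raw["answer"].strip(), engine, list(raw.get("citations", [])))
    if engine == "live_model":
        # Assumed shape: {"reply": str}
        return BixbyResponse(raw["reply"].strip(), engine)
    if engine == "on_device":
        # Assumed shape: {"status": str}, e.g. confirmation of a device action
        return BixbyResponse(raw["status"].strip(), engine)
    raise ValueError(f"unknown engine: {engine}")
```

This only addresses formatting and attribution. Tone, memory, and permissions would each need their own shared layer for the experience to feel truly unified.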

B) Partner availability and regional limits

Perplexity or DeepSeek features could be:

limited to specific devices

limited to certain regions

behind beta toggles

dependent on legal/partner agreements

The current leaks should be treated as directional, not guaranteed.

C) Accuracy and hallucinations

Even with sources, AI can still:

misinterpret questions

cite weak sources

summarize incorrectly

The best defense is the Perplexity-style approach: show where the information came from, so users can check the sources and verify the answer themselves.

12) When will One UI 8.5 arrive, and which phones will get it?

One UI 8.5 is drawing attention because of the leaks and news coverage. It is reportedly in testing now and is tied to Samsung’s next big update cycle. Some expect it to arrive around the early-2026 flagship launch.

Samsung can still change rollout timing and device eligibility. Usually:

New flagship models launch with the headline features first.

Some older devices get the update later, and sometimes without every AI feature, because their chips are less powerful and cannot support everything newer hardware can.

The key thing to watch is how Samsung positions this feature: as

a core One UI feature (broad rollout), or

a flagship-only “Galaxy AI premium” feature (limited rollout)

13) The big takeaway: what this rumor really tells us

Even if names change, partners differ, or timelines slip, the core strategy stays the same:

Samsung wants Bixby to become the central intelligence hub of its products, the AI assistant Samsung users rely on every day, one that can:

control the device deeply, and

answer modern conversational questions competitively, by using best-in-class external AI engines.

Perplexity is the rumored choice for web answers with sources, while DeepSeek is reportedly being considered to power more engaging, live-feeling conversations in some builds or regions.

All of this is happening while Samsung continues to bring Galaxy AI to hundreds of millions of devices, with Gemini still playing a major role across the Galaxy AI ecosystem.

If Samsung executes well, One UI 8.5 could be the first time in years that people stop thinking of Bixby as “the button I remapped” and start thinking of it as “the assistant I actually use.”